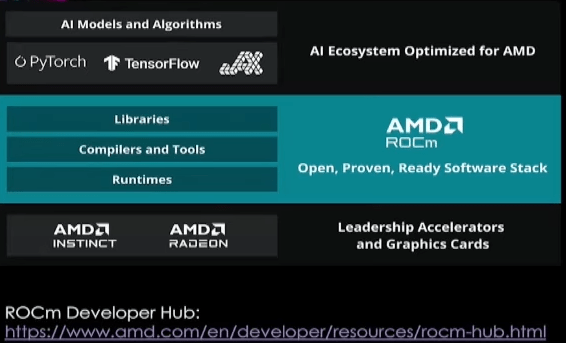

AMD ROCm™ is an open software stack including drivers, development tools, and APIs that enable GPU programming from low-level kernels to end-user applications. ROCm is optimized for generative AI and HPC applications, and it makes migrating existing code easy.

In this article I’m going to cover the technical details of the ROCm stack and how useful it is for your AI workloads.

What actually is ROCm?

ROCm is a collection of compilers, libraries, and runtimes that accelerate AI and math computation. If you’re thinking about CUDA, the actual AMD equivalent is HIP. HIP is like a C++ library, but it’s really hard to program in unless you understand things like streaming multiprocessors, warps, and threads. You generally don’t want to be programming in HIP directly, and thankfully the AMD software stack means you don’t have to.

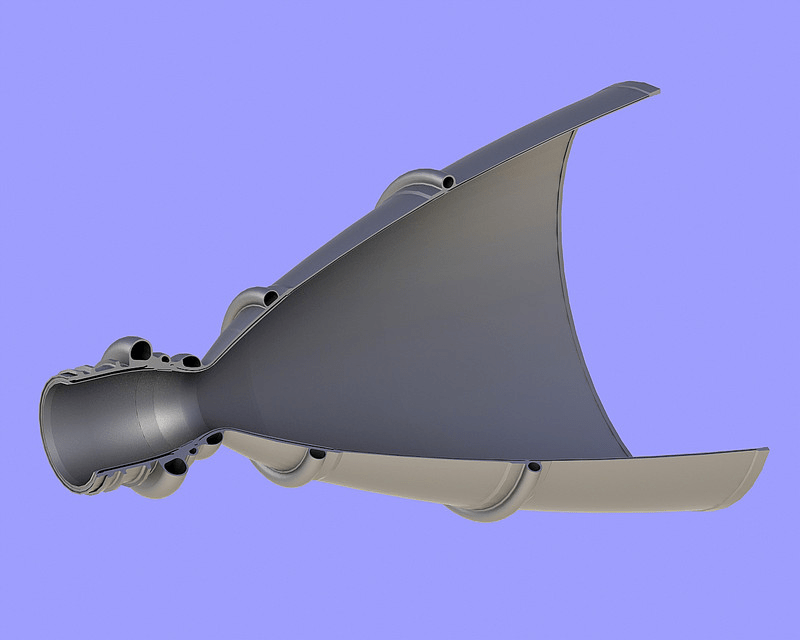

If you look at the image, at the very bottom you have the AMD Instinct and Radeon GPUs. The middle layer is the ROCm software stack, which takes care of actually interfacing with the GPU. On top are our favorite AI frameworks, generally PyTorch, TensorFlow, or JAX. Luckily, the PyTorch developer community and companies like Hugging Face work with ROCm to make everything work seamlessly, so you don’t have to.
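As a small illustration of that layering, here is a minimal sketch that reports which GPU backend a PyTorch install targets. It relies on two facts about ROCm builds of PyTorch: `torch.version.hip` holds a version string (it is `None` on other builds), and AMD GPUs are exposed through the usual `torch.cuda` namespace. The function name `describe_pytorch_backend` is made up for this example.

```python
import importlib.util


def describe_pytorch_backend() -> str:
    """Return a one-line summary of the GPU backend this PyTorch build targets."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed in this environment"

    import torch

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    if hip_version is None:
        return "This PyTorch build was not compiled for ROCm"

    # ROCm builds reuse the torch.cuda namespace, so no AMD-specific call is needed.
    if not torch.cuda.is_available():
        return f"ROCm build (HIP {hip_version}), but no GPU is visible"

    return f"ROCm build (HIP {hip_version}) on {torch.cuda.get_device_name(0)}"


print(describe_pytorch_backend())
```

Because the device is still addressed as `"cuda"`, most existing PyTorch scripts should not need AMD-specific changes.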

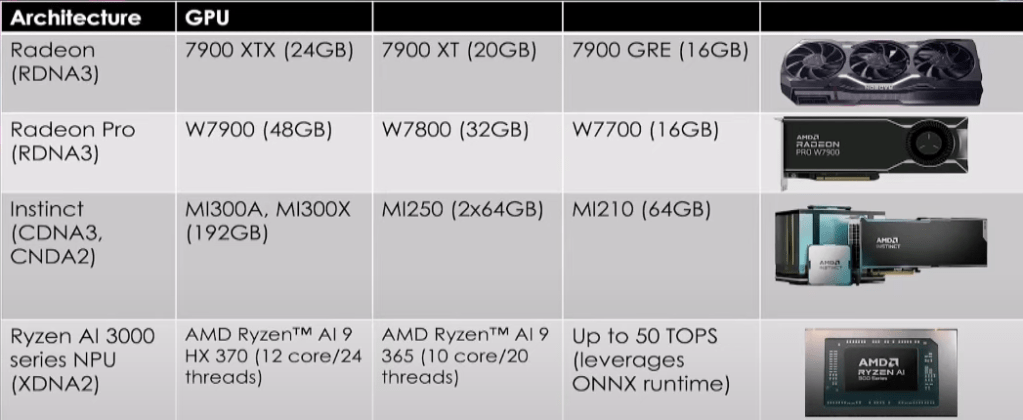

AMD Hardware

There are really two classes of AMD GPUs. (There are also the new Ryzen AI CPUs just announced at Computex, which run AI workloads on NPUs, but those are not discussed here.) The server, or Instinct, class GPUs run on the CDNA architecture, while the Radeon and Radeon Pro GPUs run on RDNA 3, which you’ll sometimes hear referred to as Navi 3. Depending on which architecture you’re running, you’ll find that some software works and some doesn’t.

The GPUs getting the most hype right now are probably the MI300X. Those are really cool GPUs: you can see the GPU die in the image, they come with either air cooling or water cooling, and they can pretty much compete head-to-head with the Nvidia H100s.

The GPUs you’re probably more familiar with are the gamer or consumer-class GPUs: the 7900 XT, XTX, and GRE, all of which are supported on ROCm. Then of course you have the Radeon Pro GPUs, one of which comes with 48 GB of VRAM, so you can load all kinds of models on the GPU, even 70B models once they are quantized to fit.
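As a rough sanity check on what fits in 48 GB, the sketch below estimates the memory needed for model weights alone at different precisions. It is back-of-the-envelope arithmetic under stated assumptions: decimal gigabytes, weights only, and no allowance for the KV cache, activations, or framework overhead. The helper name `weight_memory_gb` is made up for this example.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    bytes_per_param = bits_per_param / 8
    # (params_billions * 1e9 params) * bytes_per_param / (1e9 bytes per GB)
    return params_billions * bytes_per_param


VRAM_GB = 48  # capacity quoted in the text above

for bits in (16, 8, 4):
    needed = weight_memory_gb(70, bits)
    verdict = "fits" if needed <= VRAM_GB else "does not fit"
    print(f"70B at {bits}-bit: {needed:.0f} GB of weights, {verdict} in {VRAM_GB} GB")
```

Under these assumptions a 70B model needs about 140 GB at 16-bit and 70 GB at 8-bit, so only a roughly 4-bit quantized version (about 35 GB) fits on a single 48 GB card.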

Software Compatibility

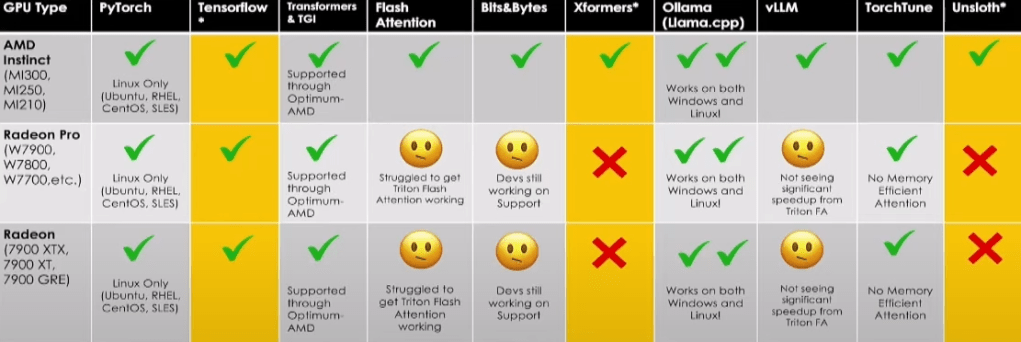

PyTorch fully supports the Radeon, Radeon Pro, and AMD Instinct GPUs. The things you’re most familiar with, PyTorch and TensorFlow, of course work out of the box. The only catch is that with PyTorch you currently have to be on Linux, which generally means Ubuntu, Red Hat Enterprise Linux, or SUSE Linux Enterprise.

I didn’t actually test this myself, but people in the developer community have reported that libraries like xFormers and Unsloth don’t work on the Radeon and Radeon Pro GPUs. The Transformers library and Text Generation Inference, on the other hand, I’m happy to report work fully on all of the AMD GPUs, the Radeon Pro and Instinct GPUs listed in the image. The people at Hugging Face have been working really hard on getting the Transformers library to work on AMD using the Optimum-AMD library. They also released a Hugging Cast episode on YouTube that walks through Text Generation Inference running on the MI300X GPU, so you could definitely watch that.
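Before debugging compatibility issues, it can help to see what is actually present in your environment. The sketch below checks whether the OS is Linux and which of the libraries mentioned above are importable. The import names in `PACKAGES` are assumptions about how these libraries are usually installed, and `environment_report` is a made-up helper. It only tells you a package is installed, not that it works on your GPU.

```python
import importlib.util
import platform

# Import names assumed for the libraries mentioned above; adjust to your setup.
PACKAGES = ["torch", "tensorflow", "transformers", "optimum", "xformers", "unsloth"]


def environment_report() -> dict:
    """Map 'linux' and each package name to True/False for this environment."""
    report = {"linux": platform.system() == "Linux"}
    for name in PACKAGES:
        # find_spec returns None when a top-level package cannot be found.
        report[name] = importlib.util.find_spec(name) is not None
    return report


for key, value in environment_report().items():
    print(f"{key:<14}{'yes' if value else 'no'}")
```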

Some time back, AMD published a video on their YouTube channel explaining that the newest version, ROCm 6.1, now supports WSL (Linux on Windows). That’s really awesome to see, because it suggests we’ll probably get PyTorch on ROCm working on Windows very soon. I hope that goes to show you the kind of effort being put behind this: the AMD engineers and the wider developer community are working really hard to get AI workloads running on AMD GPUs.
